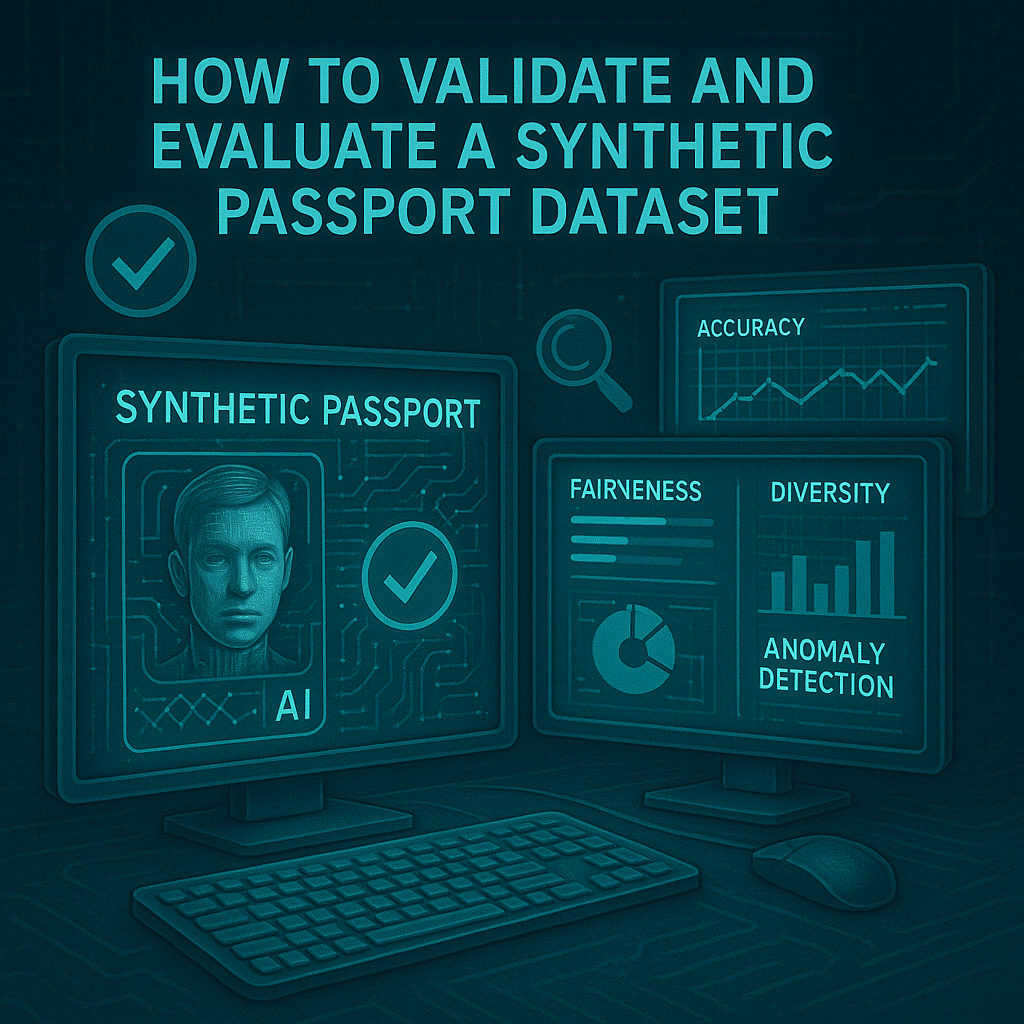

The creation of a synthetic passport dataset is a complex process involving sophisticated generative AI and rule-based systems. However, generating the data is only half the battle. To be truly useful, a synthetic dataset must be rigorously validated and evaluated to ensure its quality, utility, and privacy. Without a robust validation process, a dataset could be flawed, leading to the development of biased or ineffective AI models.

The validation of synthetic data is a multi-faceted process that can be broken down into three core dimensions: Fidelity, Utility, and Privacy. These three pillars form the framework for a comprehensive evaluation, ensuring the dataset meets the high standards required for critical applications like identity verification, biometric authentication, and fraud detection.

1. Fidelity: How Realistic Is the Data?

Fidelity measures how closely the synthetic dataset’s statistical and structural properties mirror those of the real-world data it aims to replicate. High fidelity matters because a model trained on the data then learns the patterns and relationships it will encounter in production, rather than ones that hold only in a lab environment.

Metrics and Techniques for Measuring Fidelity:

- Statistical Similarity: This is the most fundamental aspect of fidelity. It involves comparing the statistical distributions of features in the synthetic and real datasets.

    - Univariate Analysis: Comparing the distribution of individual features (e.g., the distribution of birth dates, name lengths, or the number of characters in the passport number). Common metrics include the Kolmogorov-Smirnov (KS) test and Wasserstein distance, which quantify the difference between two distributions.

    - Multivariate Analysis: This goes a step deeper, comparing the relationships and correlations between multiple features. Visual tools like correlation heatmaps are used to compare the correlations between features in both datasets.

- Structural Plausibility: This dimension assesses whether the generated data adheres to the rules and structures of a real passport.

    - Document Validation: The synthetic passports must adhere to international standards like the ICAO Document 9303. This means validating the Machine Readable Zone (MRZ) to ensure its check digits and overall structure are correct. Automated scripts can perform these checks on every single document in the dataset.

    - Visual Plausibility: A more subjective but critical test. The synthetic passports should look real to the human eye, including subtle details like correct fonts, security features (e.g., holograms and watermarks), and realistic wear and tear. Visual inspection by domain experts is a key part of this process.

- Visual Comparison Tools: Techniques like t-SNE or PCA can be used to visualize high-dimensional data by reducing it to a 2D plot. By plotting both the real and synthetic data on the same chart, developers can visually inspect whether the synthetic data occupies a similar space, indicating that it has captured the underlying structure of the real data.
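Two of the fidelity checks above are straightforward to automate. The sketch below is a minimal, standard-library illustration rather than a reference implementation: `ks_statistic` computes the two-sample Kolmogorov-Smirnov statistic for one numeric feature, and `mrz_check_digit` applies the ICAO Doc 9303 check-digit rule (weights 7, 3, 1 repeating, sum modulo 10). The function names and the sample values are hypothetical; in practice a library routine such as `scipy.stats.ks_2samp` would typically replace the hand-written KS code, since it also reports a p-value.

```python
from bisect import bisect_right


def ks_statistic(real, synthetic):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    a, b = sorted(real), sorted(synthetic)
    gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap
    # occurs at one of the observed values.
    for x in set(a) | set(b):
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def mrz_char_value(ch):
    """Map one MRZ character to its numeric value (digits, A-Z, filler '<')."""
    if ch in "0123456789":
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10  # A=10 ... Z=35
    if ch == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {ch!r}")


def mrz_check_digit(field):
    """Check digit for an MRZ field: weights 7, 3, 1 repeating, sum mod 10."""
    weights = (7, 3, 1)
    total = sum(mrz_char_value(ch) * weights[i % 3] for i, ch in enumerate(field))
    return total % 10


def mrz_field_is_valid(field, check_char):
    """True if the check character printed in the MRZ matches the computed digit."""
    return check_char in "0123456789" and mrz_check_digit(field) == int(check_char)


if __name__ == "__main__":
    # Illustrative values only: name lengths sampled from each dataset.
    real_name_lengths = [5, 7, 8, 6, 9, 7]
    synthetic_name_lengths = [6, 7, 8, 7, 9, 5]
    print("KS statistic:", ks_statistic(real_name_lengths, synthetic_name_lengths))
    print("Document number valid:", mrz_field_is_valid("L898902C3", "6"))
```

A KS statistic near 0 means the two feature distributions are nearly indistinguishable, while a value near 1 means they barely overlap. The MRZ check can be run over every check-digit field of every generated document, with any failure treated as a generator bug.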

2. Utility: Is the Data Useful for a Task?

Utility is arguably the most important dimension. It measures whether the synthetic dataset can effectively replace real data for its intended purpose, such as training a machine learning model. A dataset can have high fidelity but low utility if it fails to capture the complex, nuanced relationships needed for a specific task.

Metrics and Techniques for Measuring Utility:

- Train on Synthetic, Test on Real (TSTR): This is the gold standard for utility evaluation. It involves training an ML model exclusively on the synthetic dataset and then testing its performance (e.g., accuracy, precision, recall) on a separate, held-out set of real-world data. The TSTR model’s performance is then compared to a baseline model trained and tested on real data (TRTR). If the TSTR model’s performance is comparable to the TRTR model, the synthetic dataset is considered to have high utility.

- Downstream Task Performance: This metric evaluates how well models built with the synthetic data perform on a specific, real-world task. For a synthetic passport dataset, this could mean checking whether a facial recognition model trained with synthetic faces matches faces more accurately, or whether a document analysis model extracts data fields more accurately.

- Query-Based Evaluation: This is particularly relevant for datasets that will be used for analytics. It involves running a set of specific queries on both the real and synthetic datasets and comparing the results. For example, a query might ask for the distribution of passports issued in a specific year. If the results from the synthetic data closely match the results from the real data, its utility for analytical purposes is high.
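The TSTR comparison described above can be sketched in a few lines. This is an illustration under stated assumptions, not a prescribed pipeline: a nearest-centroid classifier stands in for whatever model is actually used, the two features (an OCR confidence score and a font deviation score) are hypothetical, and the toy values are invented purely to make the example run.

```python
from statistics import mean


def fit_centroids(rows, labels):
    """Train a nearest-centroid classifier: one mean vector per label."""
    grouped = {}
    for row, label in zip(rows, labels):
        grouped.setdefault(label, []).append(row)
    return {
        label: [mean(column) for column in zip(*members)]
        for label, members in grouped.items()
    }


def predict(centroids, row):
    """Return the label whose centroid is closest to the row."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(row, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


def accuracy(centroids, rows, labels):
    hits = sum(predict(centroids, row) == label for row, label in zip(rows, labels))
    return hits / len(rows)


def tstr_report(synthetic, real_train, real_test):
    """Each argument is a (rows, labels) pair. Both models are scored on real_test."""
    tstr = accuracy(fit_centroids(*synthetic), *real_test)
    trtr = accuracy(fit_centroids(*real_train), *real_test)
    return {"tstr": tstr, "trtr": trtr, "gap": trtr - tstr}


if __name__ == "__main__":
    labels = ["genuine", "genuine", "forged", "forged"]
    # Hypothetical features: [ocr_confidence, font_deviation]
    real_train = ([[0.9, 0.1], [0.8, 0.2], [0.2, 0.9], [0.3, 0.8]], labels)
    synthetic = ([[0.85, 0.15], [0.95, 0.05], [0.25, 0.85], [0.15, 0.95]], labels)
    real_test = ([[0.7, 0.3], [0.1, 0.7]], ["genuine", "forged"])
    print(tstr_report(synthetic, real_train, real_test))
```

The key design point is that both models are evaluated on the same held-out real data, so the `gap` value isolates the effect of swapping the training set. What counts as an acceptable gap depends on the application and should be set before the experiment is run.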

| Metric | How to Measure | Purpose |
|---|---|---|
| Accuracy | Compare the model’s predictions on real data after training on synthetic data. | Measures the model’s ability to make correct predictions. |
| Precision & Recall | Analyze the model’s ability to correctly identify positive and negative cases (e.g., fraudulent vs. legitimate documents). | Important for high-stakes applications like fraud detection where false positives or negatives are costly. |
| F1-Score | A harmonic mean of precision and recall. | Provides a single metric that balances the trade-off between precision and recall. |
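As a small worked example, the metrics in the table can be computed directly from true and predicted labels. The sketch below uses only the standard library; the function name and the label strings are hypothetical, and the choice to return 0.0 when a denominator is empty is a convention adopted here, not a universal rule.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    if not pairs:
        raise ValueError("at least one prediction is required")

    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    # Convention used in this sketch: report 0.0 when a denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0

    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    # Illustrative labels: real test documents scored by a model trained on synthetic data.
    y_true = ["fraudulent", "fraudulent", "legitimate", "legitimate"]
    y_pred = ["fraudulent", "legitimate", "fraudulent", "legitimate"]
    print(classification_metrics(y_true, y_pred, positive="fraudulent"))
```

For fraud detection, the positive class is usually the fraudulent one, so precision answers "how many flagged documents were really fraudulent" and recall answers "how many fraudulent documents were caught".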

3. Privacy: Does the Data Protect Individuals?

A central purpose of using a synthetic dataset is to protect individual privacy. Validation must therefore confirm that the dataset does not expose real, sensitive information or allow a real individual to be re-identified.

Metrics and Techniques for Measuring Privacy:

- Exact Match Score: This is a simple but critical check. It involves comparing every data point in the synthetic dataset with every data point in the real source dataset to ensure there are no exact copies. The goal is to achieve an exact match score of zero.

- Nearest Neighbor Analysis: This method goes a step beyond exact matches by checking for synthetic data points that are “too close” to a real data point. Using algorithms like K-Nearest Neighbors (KNN), developers can identify synthetic records that are so similar to a real one that they could potentially be used to infer information about the real person.

- Membership Inference Attacks: This is a more advanced technique. An attack model is trained to determine whether a given data point was part of the original training set for the synthetic data generator. If the attack model guesses correctly noticeably more often than chance, the generator is leaking information about its training data, which indicates a privacy risk.

- Differential Privacy: Differential privacy is a privacy-enhancing technique applied during data generation rather than a score computed on the output, but it still plays a role in validation. Auditing the synthetic data generation process to confirm that differential privacy was correctly applied provides a high degree of confidence in the dataset’s anonymity.
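The first two checks in this list, exact matches and nearest-neighbor distance, can be sketched as follows. This is a minimal illustration that assumes every record has already been encoded as a tuple of numeric features on comparable scales. The function names and the threshold are hypothetical, and the brute-force search is only suitable for small datasets; a spatial index or a KNN library would be the usual choice at scale.

```python
import math


def exact_match_count(synthetic, real):
    """Number of synthetic records that are identical to some real record."""
    real_set = {tuple(record) for record in real}
    return sum(tuple(record) in real_set for record in synthetic)


def distance_to_closest_record(synthetic, real):
    """For each synthetic record, the Euclidean distance to its nearest real record."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]


def flag_near_copies(synthetic, real, threshold):
    """Indices of synthetic records closer than `threshold` to a real record."""
    distances = distance_to_closest_record(synthetic, real)
    return [index for index, d in enumerate(distances) if d < threshold]


if __name__ == "__main__":
    # Illustrative, already-scaled feature vectors.
    real = [(0.0, 0.0), (1.0, 1.0)]
    synthetic = [(0.0, 0.0), (0.5, 0.5), (1.0, 1.01)]
    print("Exact matches:", exact_match_count(synthetic, real))
    print("Near copies:", flag_near_copies(synthetic, real, threshold=0.05))
```

An exact copy has distance zero, so it is also caught by the near-copy check. The threshold has to be chosen per dataset, for example by comparing against the typical distance between distinct real records.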

Related Concepts

The validation and evaluation of synthetic passport datasets are a central part of the broader data science and cybersecurity domains. These processes are deeply connected to a range of concepts and techniques, including:

- Algorithmic Bias (Mitigation through balanced datasets)

- Data Augmentation (A related but distinct technique)

- Machine Learning Operations (MLOps) (Integrating validation into the ML lifecycle)

- Computer Vision (The core field the datasets are used for)

- Data Governance (The policies and procedures for managing data)

- Deep Learning (The technology behind generative AI)

- Data Utility

- Fidelity Metrics

- Privacy-preserving AI

- Synthetic Data Validation

Conclusion: A Foundation of Trust

Validating a synthetic passport dataset is a strategic imperative for any organization building AI-powered security solutions. It’s not a one-time task but a continuous process that should be integrated into the machine learning lifecycle. By focusing on the three pillars of fidelity, utility, and privacy, developers and data scientists can create synthetic datasets that are not just a convenient substitute for real data, but a superior, more ethical, and more secure foundation for building the AI systems of the future. The meticulous process of validation builds trust, ensuring that the AI models trained on these datasets are not only powerful but also responsible and reliable in a world where digital identity is paramount.

FAQs

Validation involves checking realism, diversity, and usefulness for AI model training.

Metrics include accuracy in recognition tasks, dataset balance, and spoof detection performance.

It ensures the dataset produces reliable AI models and avoids bias.

Yes, researchers compare them with standard datasets to measure performance.

AI researchers, security experts, and compliance auditors usually assess dataset quality.