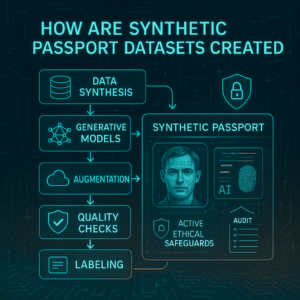

Synthetic data has changed how artificial intelligence (AI) systems are built, offering a practical answer to the perennial problem of data scarcity. For sensitive applications like identity verification and fraud detection, synthetic passport datasets are especially valuable. By providing a practically unlimited supply of realistic, yet entirely artificial, passport images, they allow developers to train AI models without many of the ethical and legal complexities of handling real-world personal information.

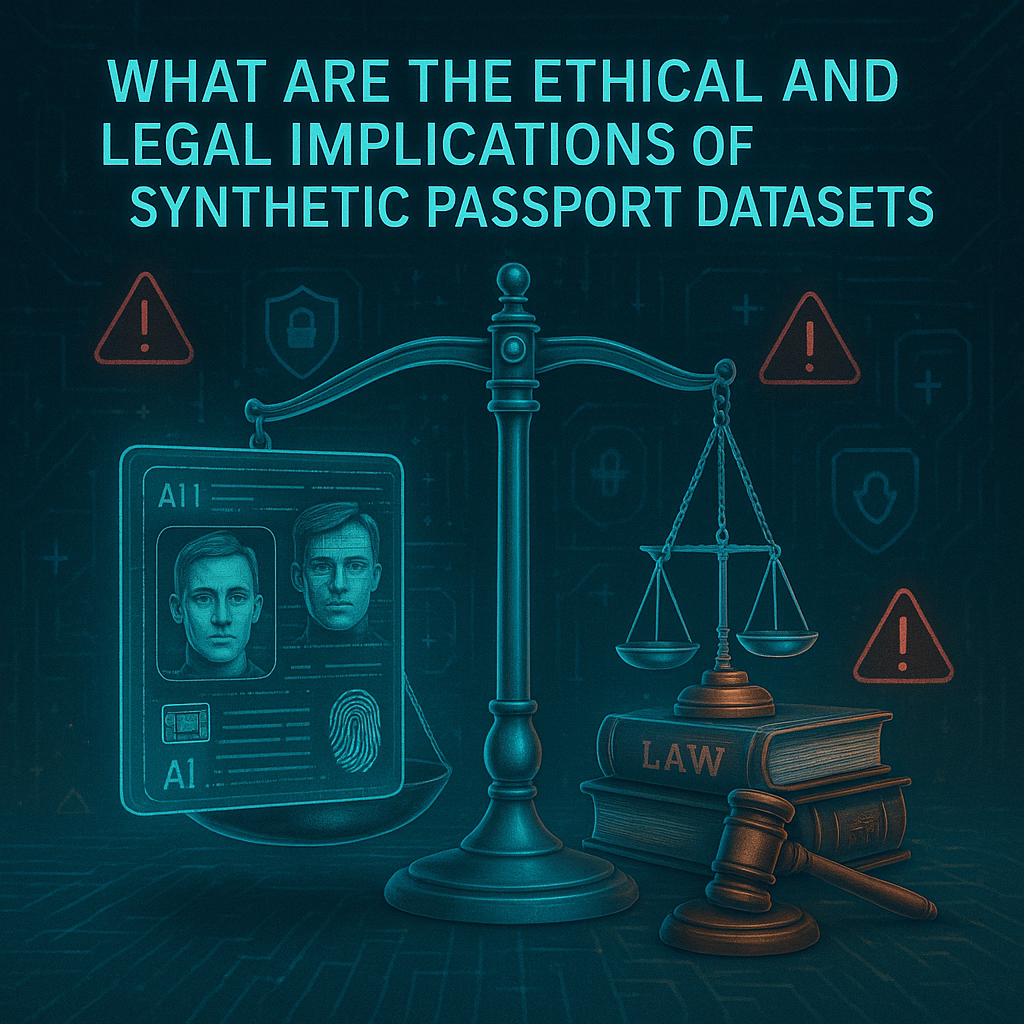

However, while they offer a clear path to privacy compliance, these datasets are not without their own set of ethical and legal implications that demand careful consideration.

1. The Promise: A Solution to Data Privacy Challenges

The primary ethical and legal benefit of synthetic passport datasets is their ability to address data privacy concerns head-on. In a world governed by strict regulations like the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States, obtaining and using real-world passport data for AI training is a legal minefield. A single data breach could result in massive fines and a loss of public trust.

- GDPR and CCPA Compliance: Synthetic datasets are designed to be privacy-preserving. When the data is generated without reference to real individuals and contains no personally identifiable information (PII), it generally falls outside the scope of these data protection laws. That holds only as long as no record can be linked back to a real person. Under that condition, companies can innovate and develop AI systems with a much lower risk of non-compliance.

- Ethical Data Use: Beyond legal requirements, using synthetic data is an ethical choice. It demonstrates a commitment to safeguarding individual privacy and avoiding the potential misuse of sensitive data. Companies can train and test their models in a secure environment, knowing that no real person’s information is at risk.

- Democratization of AI: The high cost and legal hurdles of acquiring real datasets often create a barrier to entry for smaller companies and researchers. Synthetic datasets make valuable training data accessible to a wider community, fostering innovation and democratizing the development of powerful AI tools.

2. The Peril: New Ethical and Legal Hurdles

Despite their clear advantages, synthetic passport datasets introduce a new set of ethical and legal considerations that require a nuanced approach.

- Risk of Re-identification: While synthetic data is designed to be anonymous, there is a risk of re-identification, especially if the data generation model is not robust. If the generative model “memorizes” records from its training data (a real, but perhaps smaller, source dataset) instead of learning general patterns, it could inadvertently produce a synthetic data point that matches, or closely resembles, a real person. This could lead to a privacy breach and undermine the very purpose of using synthetic data.

- Algorithmic Bias: Synthetic data does not automatically solve the problem of algorithmic bias. If the real-world data used to train the generative model is biased—for example, containing a disproportionate number of passports from a specific demographic—the synthetic dataset will likely inherit and even amplify this bias. An AI model trained on such data could then unfairly discriminate against certain groups in its decisions, a major ethical concern in applications like automated border control or job applications.

- Liability and Accountability: The legal landscape for AI is still evolving. When an AI system trained on a synthetic dataset makes an incorrect or harmful decision, who is accountable? Is it the developer of the AI system, the provider of the synthetic dataset, or the end-user? The lack of clear legal precedent creates a liability vacuum. The EU AI Act, for instance, is starting to provide a framework by classifying AI systems based on their risk, but the specifics of liability for synthetic data remain a complex issue.
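
The first two risks above, re-identification and inherited bias, can be screened for with simple checks before a dataset is released. The following is a minimal sketch, assuming each passport record is available as a dictionary of text fields. The field names, the function names, and the sample values are all hypothetical and chosen only for illustration. Exact matching catches verbatim copies only, so a real audit would also need near-duplicate and face-similarity checks.

```python
from collections import Counter

# Hypothetical record layout: the fields treated as identifying are an assumption.
QUASI_IDENTIFIERS = ("surname", "given_names", "date_of_birth", "nationality")


def normalize(record, fields=QUASI_IDENTIFIERS):
    """Build a comparison key from the chosen fields, ignoring case and padding."""
    return tuple(str(record[f]).strip().lower() for f in fields)


def find_source_matches(synthetic, source, fields=QUASI_IDENTIFIERS):
    """Return synthetic records whose key also appears in the source data."""
    source_keys = {normalize(r, fields) for r in source}
    return [r for r in synthetic if normalize(r, fields) in source_keys]


def share_by_group(records, field):
    """Fraction of records falling under each value of `field`."""
    counts = Counter(r[field] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def share_drift(synthetic, source, field):
    """Synthetic share minus source share for every group seen in either set."""
    syn = share_by_group(synthetic, field)
    src = share_by_group(source, field)
    return {g: syn.get(g, 0.0) - src.get(g, 0.0) for g in sorted(set(syn) | set(src))}


# Made-up placeholder records, not taken from any real dataset.
source = [
    {"surname": "Doe", "given_names": "Jane", "date_of_birth": "1990-01-01", "nationality": "UTO"},
    {"surname": "Roe", "given_names": "Sam", "date_of_birth": "1985-05-05", "nationality": "UTO"},
]
synthetic = [
    {"surname": "DOE ", "given_names": "jane", "date_of_birth": "1990-01-01", "nationality": "UTO"},
    {"surname": "Poe", "given_names": "Kim", "date_of_birth": "1970-02-02", "nationality": "XXA"},
]

leaked = find_source_matches(synthetic, source)      # records copied from the source
drift = share_drift(synthetic, source, "nationality")  # per-group share difference
print(len(leaked), drift)
```

A non-empty `leaked` list signals that the generator reproduced a source record. Large values in `drift` show where the synthetic set over- or under-represents a group relative to the source. Whether the source distribution is itself the right target is a separate fairness question that this check does not answer.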

3. Forgery and Misuse of Technology

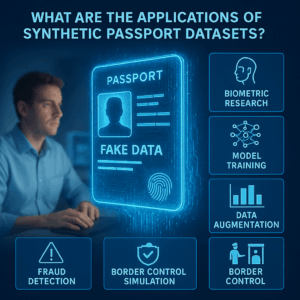

Perhaps the most significant ethical and legal risk associated with synthetic passport datasets is their potential for misuse. The very technology used to create them for legitimate purposes could be co-opted for nefarious ones.

- Facilitating Forgery: The publicly available tools and research papers that detail how to create highly realistic synthetic passports could be used by criminals to generate convincing forgeries. While the intent of these resources is to train AI to detect fraud, the knowledge itself could be weaponized.

- “Deepfakes” and Misinformation: The ability to generate realistic faces and personal information could be used to create “deepfake” identities for malicious purposes, such as social engineering, phishing scams, or creating fake online personas to spread misinformation. This blurs the line between a fictional character and a real identity, making it easier to deceive both humans and automated systems.

4. Legal Frameworks and Best Practices

To navigate these challenges, a combination of legal frameworks, industry standards, and ethical best practices is necessary.

- Legal Clarity: Regulators are beginning to grapple with the legal status of synthetic data. The key legal question is whether synthetic data, even if generated from scratch, can be considered “personal data” if it has the potential to be linked to a real person. As of now, there is no single, universally accepted legal framework.

- Differential Privacy: To mitigate the risk of re-identification, developers can incorporate differential privacy into their synthetic data generation models. This technique adds calibrated random noise while the generator is trained or queried, which mathematically bounds how much any single individual’s record can influence the synthetic dataset. As a result, very little can be inferred about that individual from the output, even with advanced analytical tools.

- Transparency and Auditability: AI developers should be transparent about their use of synthetic data. This includes documenting the data generation process, the source of any seed data, and the measures taken to prevent bias. This transparency is crucial for ensuring accountability and building trust.

- Responsible AI Principles: The development and use of synthetic data should adhere to a set of responsible AI principles, including fairness, transparency, and accountability. Organizations must not only comply with the law but also operate in a way that serves the public good and minimizes harm.
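
To make the differential privacy item above concrete, here is a minimal sketch of one standard technique, the Laplace mechanism, applied to a histogram of a single categorical field. It illustrates the idea and is not a description of how any particular synthetic passport generator works. The function names, the category list, and the choice of `epsilon` are assumptions, and the sketch assumes that two datasets count as neighbors when one person's record is added or removed. Naive floating-point noise like this has known weaknesses, so production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_histogram(values, categories, epsilon, rng=None):
    """Release noisy per-category counts under epsilon-differential privacy.

    Adding or removing one record changes exactly one count by 1, so the
    L1 sensitivity is 1 and the Laplace noise scale is 1 / epsilon.
    `categories` must be fixed in advance, not derived from the data.
    """
    rng = rng or random.Random()
    counts = {c: 0 for c in categories}
    for v in values:
        counts[v] += 1
    return {c: n + laplace_noise(1.0 / epsilon, rng) for c, n in counts.items()}


def sample_synthetic(noisy_counts, k, rng=None):
    """Sample k synthetic labels from the noisy histogram (pure post-processing)."""
    rng = rng or random.Random()
    cats = list(noisy_counts)
    # Noise can push counts below zero; clamp them before using them as weights.
    weights = [max(noisy_counts[c], 0.0) for c in cats]
    if sum(weights) == 0:
        weights = [1.0] * len(cats)  # fall back to uniform sampling
    return rng.choices(cats, weights=weights, k=k)


# Hypothetical example: issuing-region labels from a small source dataset.
regions = ["north", "south", "east"]
source_labels = ["north"] * 40 + ["south"] * 25 + ["east"] * 5
noisy = dp_histogram(source_labels, regions, epsilon=1.0, rng=random.Random(7))
synthetic_labels = sample_synthetic(noisy, k=10, rng=random.Random(7))
print(noisy, synthetic_labels)
```

A smaller `epsilon` means more noise and stronger privacy at the cost of fidelity. Because sampling from the noisy counts is post-processing, it does not weaken the guarantee established when the counts were released.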

Related Concepts

- Generative AI

- AI ethics

- Data governance

- Algorithmic fairness

- Privacy-preserving AI

- Data protection laws

- Cybersecurity

- Digital identity

- Machine Learning (ML) training

- Deepfake technology

Conclusion: A Dual-Use Technology

Synthetic passport datasets represent a powerful, dual-use technology. In the right hands, they are a force for good, enabling the development of more secure, private, and equitable AI systems for digital identity verification and fraud prevention. They solve the critical problem of data access, freeing developers from the constraints of sensitive PII. However, their potential for misuse and the new ethical challenges they introduce—from re-identification to algorithmic bias—cannot be ignored. As the technology continues to mature, the focus must shift from simply generating synthetic data to governing its creation and use with a robust legal framework and a strong commitment to ethical principles. This strategic approach will ensure that the future of AI is built on a foundation of trust, not just on a mountain of data.

FAQs

Synthetic passport datasets raise ethical concerns when they are misused to create fake IDs rather than used for research.

They are generally legal when used for research, testing, and AI development, since they contain no real personal data.

Misuse can be limited by restricting dataset access, monitoring usage, and following compliance guidelines.

They can enable fraud if distributed irresponsibly, as fraudsters may exploit them for identity theft.

Existing guidelines encourage privacy protection, transparency, and responsible AI use.